Read and Write Data From Azure Synapse to Azure Databricks

03 Nov 2020Introduction

Working with big datasets has introduced many more technologies for the average Data Analyst to learn and understand. I have recently started to work more in Azure Databricks to enable our processes to be considered more optimized. For example, I do a lot of string distance calculations on my datasets and, SQL Server or even Azure Synapse is not always the best solution for this. In the past, we created these functions as CLR’s and processed the data directly in SQL, but in time our data grew even more and, this became harder and harder. Moving this workload to Azure Databrick saved us hours of processing time, but introduced a new technology barrier that we had to learn and overcome.

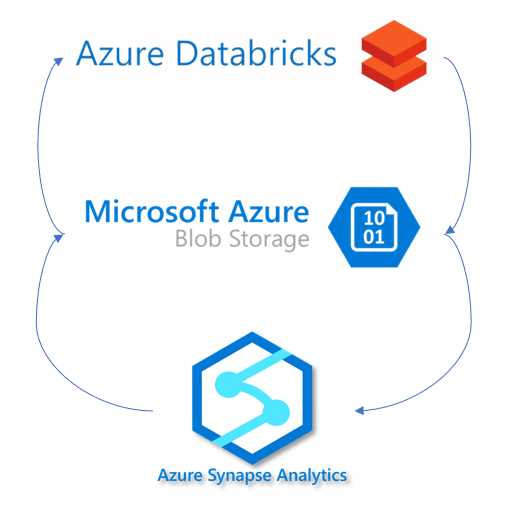

Below I go through the basic outline of what is required to load data from Azure Synapse to Azure Databricks and push down to Synapse again once done.

- Configure your BLOB storage access, this can be achieved in many other ways. Read more here

- Create the JDBC connection string and BLOB connection string

- Read the data from Azure Synapse into a Spark Dataframe using spark.read function

- Write transformed data back into Azure Synapse with spark.write

Code

CONFIGURE BLOB CREDENTIALS

spark.conf.set(

"fs.azure.account.key.<BLOBSTORAGENAME>.blob.core.windows.net",

"<ACCESSKEY>")

CONFIGURE JDBC AND BLOB PATH

jdbc = "jdbc:sqlserver://<YOURSERVERNAME>.database.windows.net:1433;database=<YOURDATABASENAME>;user=<SQLUSERNAME>@<YOURDATABASENAME>;password=<PASSWORD>;encrypt=true;trustServerCertificate=false;hostNameInCertificate=*.database.windows.net;loginTimeout=30;"

blob = "wasbs://<BLOBCONTAINER>@<BLOBSTORAGENAME>.blob.core.windows.net/"

READ DATA FROM SYNAPSE INTO DATAFRAME

df = spark.read \

.format("com.databricks.spark.sqldw") \

.option("url", jdbc) \

.option("tempDir", blob) \

.option("forwardSparkAzureStorageCredentials", "true") \

.option("Query", "SELECT TOP 1000 * FROM <> ORDER BY NEWID()") \

.load()

WRITE DATA FROM DATAFRAME BACK TO AZURE SYNAPSE

df.write \

.format("com.databricks.spark.sqldw") \

.option("url", jdbc) \

.option("forwardSparkAzureStorageCredentials", "true") \

.option("dbTable", "YOURTABLENAME") \

.option("tempDir", blob) \

.mode("overwrite") \

.save()

Disclaimer: Content is accurate at the time of publication, however updates and new additions happen frequently which could change the accuracy or relevance. Please keep this in mind when using my content as guidelines. Please always test in a testing or development environment, I do not accept any liability for damages caused by this content.

If you liked this post, you can share it with your followers or follow me on Twitter!